Fall Detection on Embedded Platform Using Kinect and Wireless Accelerometer

Abstract

In this paper we demonstrate how to accomplish reliable fall detection on a low-cost embedded platform. The detection is achieved by a fuzzy inference system using Kinect and a wearable motion-sensing device that consists of an accelerometer and a gyroscope. The foreground objects are detected using depth images obtained by Kinect, which is able to extract such images in a room that is dark to our eyes. The system has been implemented on the PandaBoard ES and runs in real time. It permits unobtrusive fall detection and preserves the privacy of the user. The experimental results indicate high effectiveness of fall detection.

1 Introduction

Special needs and daily living assistance are often associated with seniors, the disabled, the overweight and the obese. The special needs of the elderly may differ from those of an obese or overweight person, but all of these groups often require some assistance to perform their daily routines. Assistive technology or adaptive technology (AT) is an umbrella term that encompasses assistive, adaptive, and rehabilitative devices for people with special needs [6]. Assistive technology for ageing at home has become a hot research topic because of its considerable social and commercial value. One important aim of assistive technology is to allow elderly people to stay as long as possible in their home or familiar environment without changing their lifestyle. Even though physical activity is essential for preventing disease and enhancing the quality of life, falls frequently happen during walking and various forms of physical activity.

Falls are a major cause of mortality and morbidity in the elderly. Many research findings show that a high percentage of injury-related hospitalizations of seniors are the result of falls [5]. Thus, fall detection has become one of the hot research problems in assistive technology, as it can contribute toward independent living of the elderly. The goal of fall detection technology is to detect a fall as soon as possible and to generate an alert. Many efforts have been undertaken to develop technology permitting human fall detection [10]. They were inspired by the large demand and the considerable value of the fall detection market. However, despite these efforts, the existing technology does not meet the requirements of users with special needs [13].

Most proposed fall detection systems are based on a wearable device that monitors the movements of an individual, recognizes a fall and triggers an alarm. Prevalent methods utilize only accelerometers, or both accelerometers and gyroscopes, to separate falls from activities of daily living (ADLs) [10]. As a result, it is not easy to distinguish real falls from fall-like activities [2][7]. Several ADLs, such as fast sitting, have kinematic motion patterns similar to those of real falls, and in consequence such methods might trigger many false alarms. Moreover, in [4] the authors point out that the common fall detectors, which are usually attached to a belt around the hip, are not suited to being worn during sleep, and so such detectors cannot monitor the critical phase of getting up from the bed. In general, the solutions mentioned above are somewhat intrusive, as they require continuously wearing at least one device or smart sensor.

There have been several attempts to attain reliable human fall detection using a single CCD camera [1][11], multiple cameras [3] or specialized omni-directional ones [9]. The currently offered CCD-camera based solutions require time for installation and camera calibration, and they are generally not cheap. Typically, they require a PC or a notebook for image processing. The existing video-based devices for fall detection cannot work at night or in low-light conditions. In addition, most such solutions do not preserve privacy adequately. Video cameras offer several advantages in fall detection, among others the ability to detect various activities. Further advantages are low intrusiveness and the possibility of remote verification of fall events. However, the lack of depth information may lead to many false alarms.

2 Primary Challenges and Proposed Solution

The existing technology permits reaching quite high performance of fall detection. However, as mentioned above, it does not meet the requirements of users with special needs. Our literature survey shows that most approaches offer incremental improvements that cannot lead to a technological breakthrough and that have insufficient potential to make the life of people with special needs more fulfilling. Our work brings new insight into fall detection through the use of a wireless wearable device and Kinect, which is a central component of our fall detection system.

The Kinect is a revolutionary motion-sensing technology that allows tracking a person in real time without requiring the person to carry sensors. Unlike 2D cameras, Kinect allows tracking the body movements in 3D. It is the world’s first system that combines an RGB camera and a depth sensor. In order to achieve reliable and unobtrusive fall detection, our system employs both the Kinect and a wearable motion-sensing device, which complement each other. The fall detection is done by a fuzzy inference system using the low-cost Kinect and the wearable motion-sensing device consisting of an accelerometer and a gyroscope. The fuzzy inference system is a central ingredient of our fall detection prototype; it is based on expert knowledge and demonstrates high generalization abilities [8]. We show that the low-cost Kinect contributes toward reliable fall detection. Using both devices, our system can reliably distinguish falls from activities of daily living, and thus the number of false alarms is reduced. In the context of fall detection, the disadvantage of Kinect is that it can monitor only restricted areas. In areas where depth images are not available, we utilize only the wearable motion-sensing device. On the other hand, during some ADLs in which wearing this sensor might not be comfortable, for instance changing clothes or washing, the system relies on the Kinect camera only. An advantage of Kinect is that it can be placed in selected locations according to the user requirements. Moreover, the system operates on depth images and thus preserves the privacy of the people being monitored. In this context, it is worth noting that Kinect uses infrared light and is therefore able to extract depth images in a room that is dark to our eyes. The system runs in real time and has been implemented on the PandaBoard ES, which is a low-power, low-cost single-board computer development platform.

3 The System for Fall Detection

This section presents the main modules of the embedded system for fall detection. First, the system architecture is outlined. The wearable device is presented next. Then, the usefulness of the Kinect for fall detection is discussed in detail. Afterwards, the extraction of the object of interest in depth images on a computer board with limited computational resources is presented.

3.1 Main Modules of the Embedded System

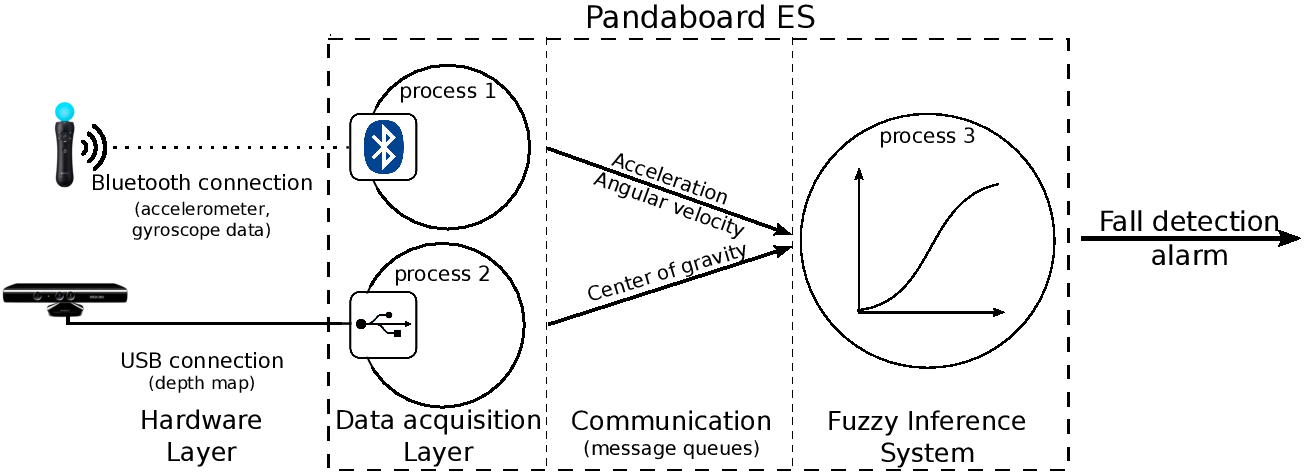

Our fall detection system uses both data from Kinect and motion data from a wearable smart device containing accelerometer and gyroscope sensors. Data from the smart device (Sony PlayStation Move) are transmitted wirelessly via Bluetooth to the PandaBoard, on which the signal processing is done, whereas Kinect is connected via USB, see Fig. 1. The system runs under the Linux operating system. Linux provides various flexible inter-process communication methods, among others message queues. Message queues provide asynchronous communication that is managed by the Linux kernel, and they are an appropriate choice for well-structured data. Our application consists of three concurrent processes that communicate via message queues, see Fig. 1. The first process is responsible for acquiring data from the wearable device, the second process acquires depth data from the Kinect, whereas the third one is responsible for processing data and triggering the alarm.
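The three-stage structure described above can be sketched as follows. This is only an illustration, not the implementation used on the PandaBoard: Python threads and `queue.Queue` stand in for the Linux processes and kernel message queues, a single shared queue is assumed, and the function names, the `STOP` sentinel and the message layout are hypothetical.

```python
import queue
import threading

STOP = None  # hypothetical sentinel: a producer has no more data


def wearable_reader(out_q, samples):
    """Stage 1: forward motion samples from the wearable device."""
    for sample in samples:
        out_q.put(("wearable", sample))
    out_q.put(STOP)


def kinect_reader(out_q, frames):
    """Stage 2: forward depth-derived measurements from the Kinect."""
    for frame in frames:
        out_q.put(("kinect", frame))
    out_q.put(STOP)


def detector(in_q, n_producers, results):
    """Stage 3: consume messages until every producer has finished.

    A real detector would fuse the two streams and raise the alarm;
    here the messages are only collected.
    """
    finished = 0
    while finished < n_producers:
        msg = in_q.get()  # blocks until a message is available
        if msg is STOP:
            finished += 1
        else:
            results.append(msg)


def run_pipeline(samples, frames):
    """Start the three stages, wait for them, and return what was received."""
    q = queue.Queue()
    results = []
    workers = [
        threading.Thread(target=wearable_reader, args=(q, samples)),
        threading.Thread(target=kinect_reader, args=(q, frames)),
        threading.Thread(target=detector, args=(q, 2, results)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results
```

The producers never wait for the consumer, which mirrors the asynchronous behaviour attributed to message queues in the text; the tagged tuples play the role of the well-structured messages.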

The algorithm runs on the PandaBoard ES, which is a mobile development platform and features a dual-core 1 GHz ARM Cortex-A9 MPCore CPU with Symmetric Multiprocessing (SMP), a 304 MHz PowerVR SGX540 integrated 3D graphics accelerator, a programmable C64x DSP, and 1 GB of DDR2 SDRAM. The board includes wired 10/100 Ethernet as well as wireless Ethernet and Bluetooth connectivity.

3.2 The Wearable Device

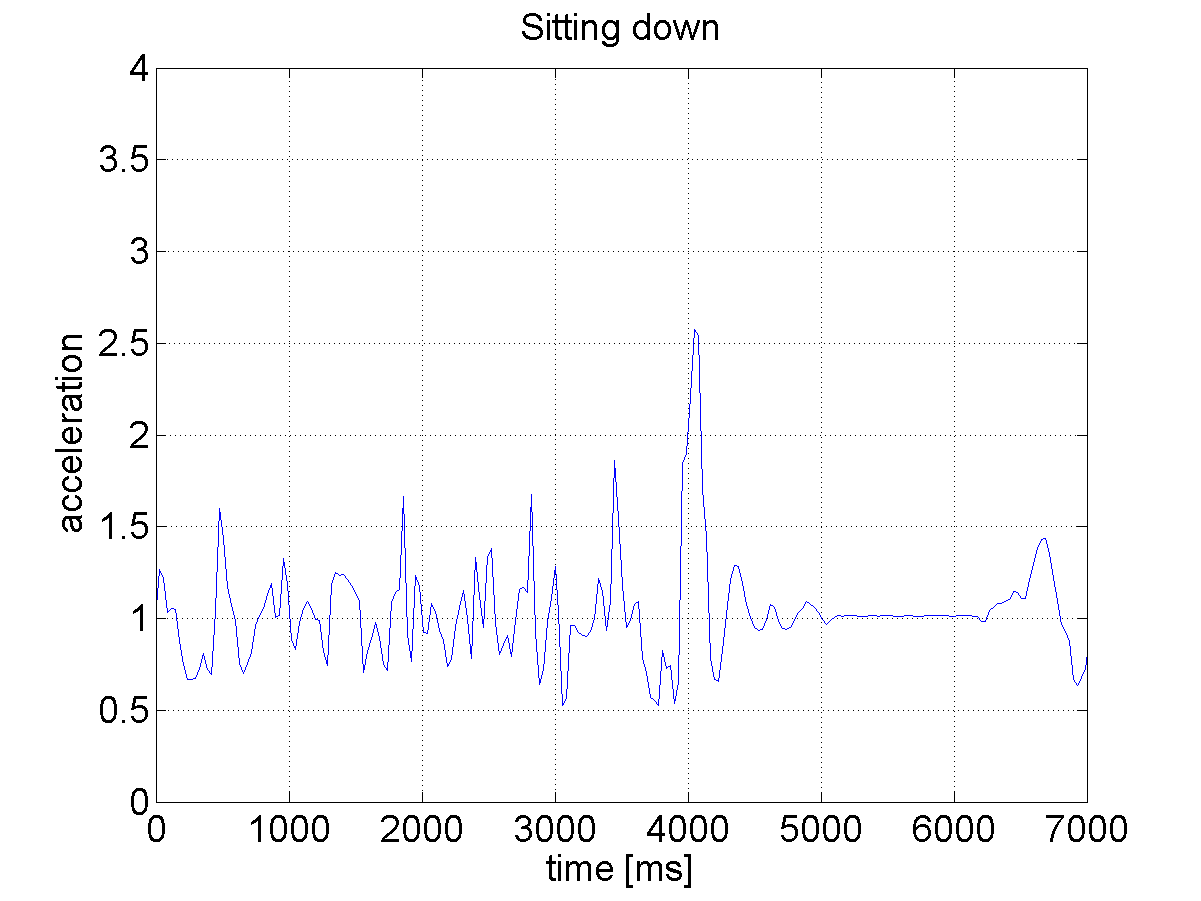

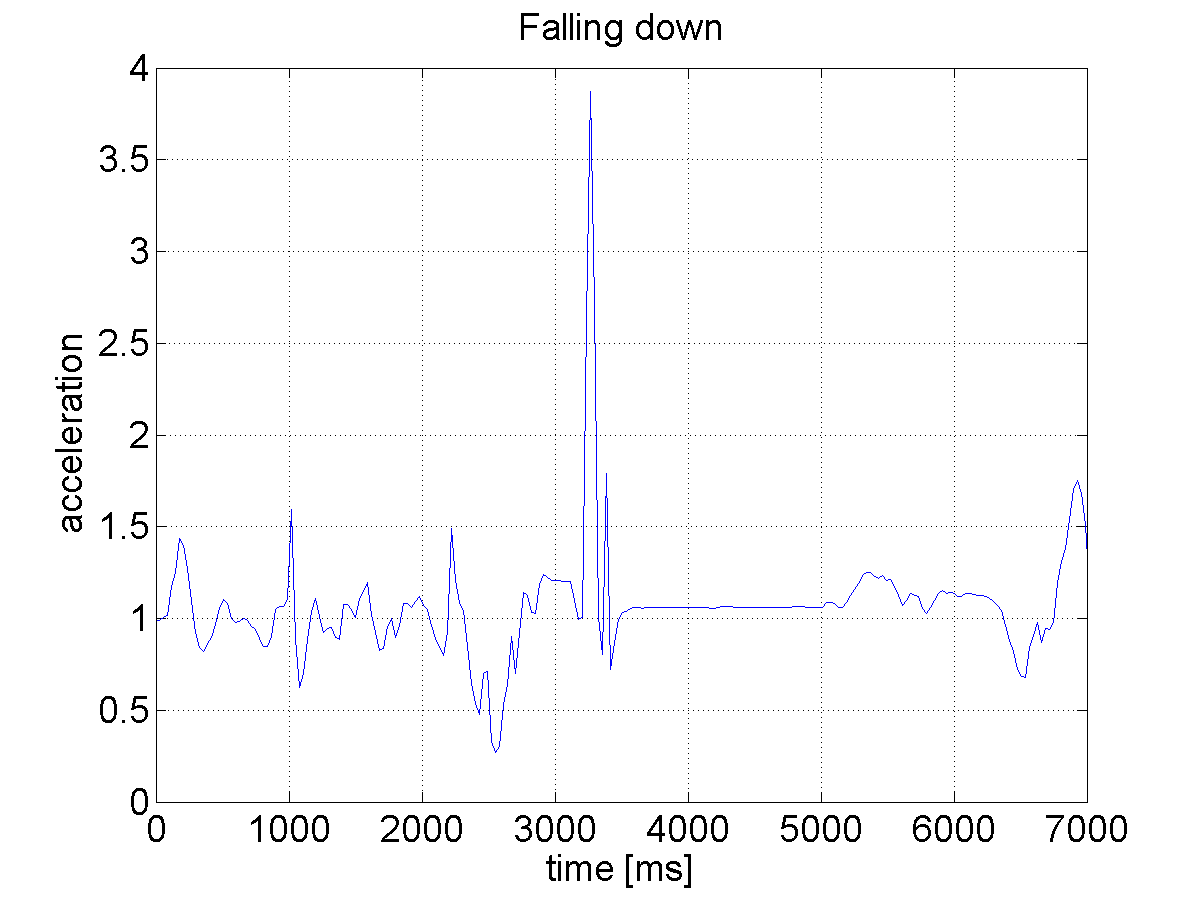

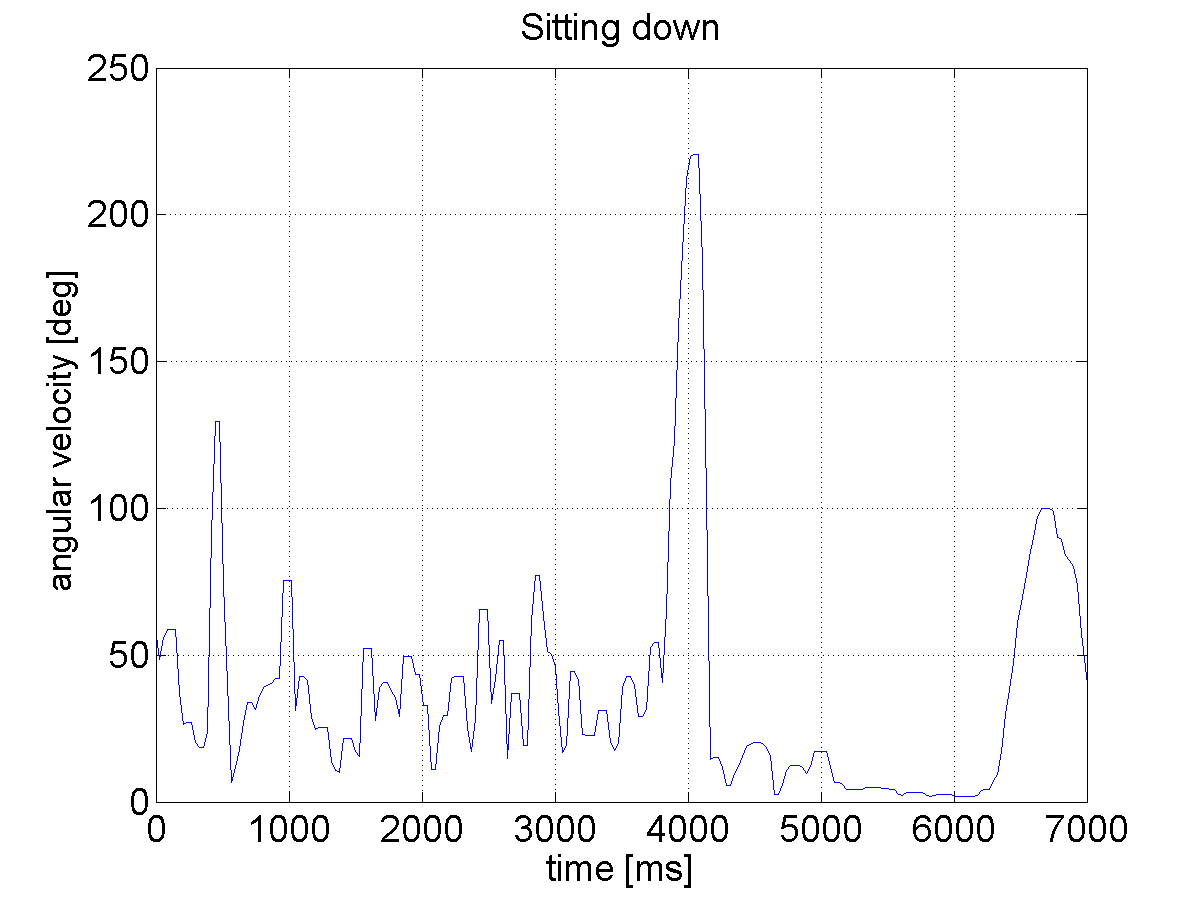

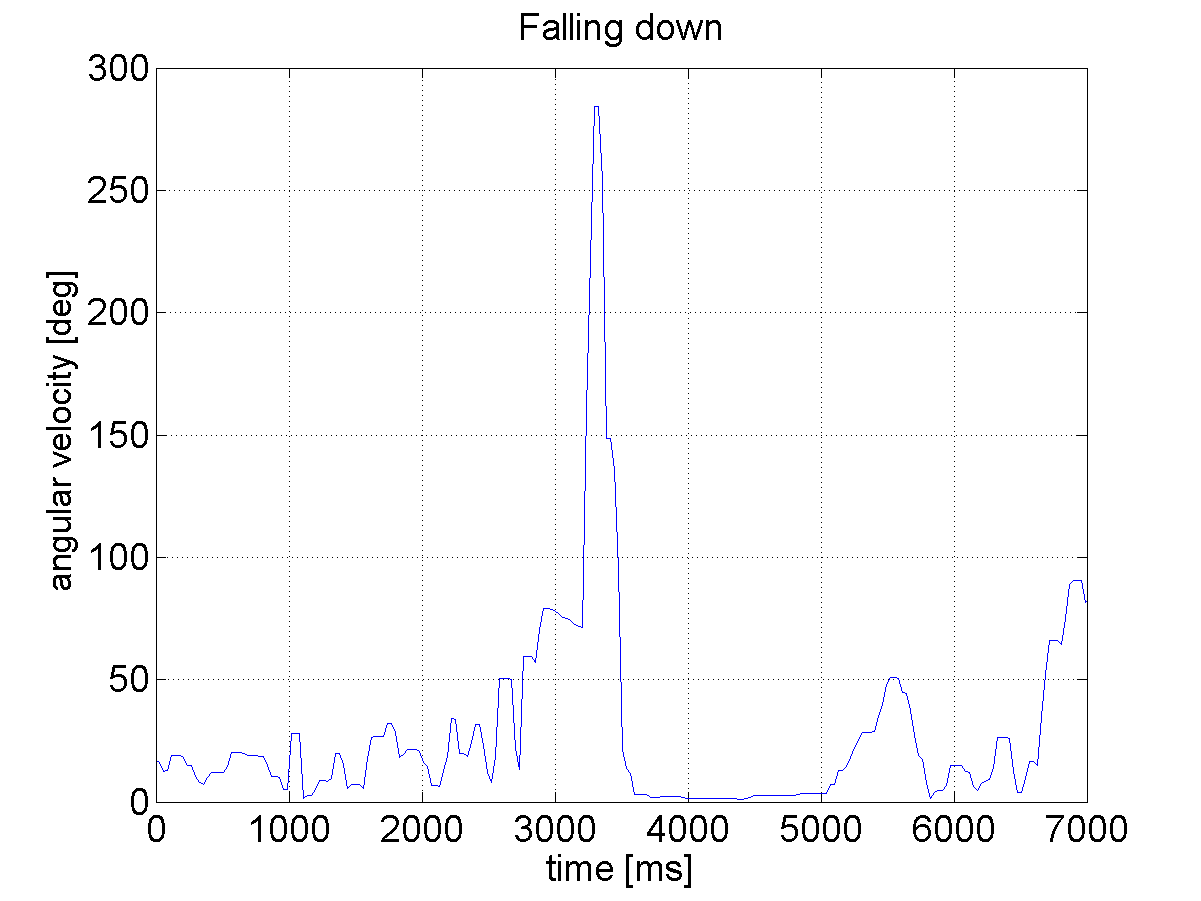

The wearable device contains one tri-axial accelerometer and a tri-axial gyroscope that consists of a dual-axis gyroscope and a Z-axis gyroscope. The first sensor measures acceleration, the rate of change in velocity across time, whereas the second one measures the rate of rotation. The acceleration is measured in units of g, where 1 g corresponds to the vertical acceleration force due to gravity. The smart sensor delivers the measurements along three axes together with the corresponding time stamps. The sampling rate of both sensors is equal to 60 Hz. The measured acceleration signals were median filtered with a window length of three samples to suppress the noise and then used to calculate the length of the acceleration vector. Figure 2 depicts the plots of acceleration and angular velocity readings vs. time for simulated falling and sitting down. As illustrated in Fig. 2, the acceleration and the angular velocity change rapidly when a person falls. As can be seen, the motion patterns of falling and sitting down are quite similar. Therefore, in order to reduce the false positives we employ a fuzzy inference system using both data from the wearable device and the Kinect. The depicted plots were obtained for the device worn near the pelvis region. It is worth noting that attaching the wearable sensor near the pelvis region or lower back is recommended because these body parts represent the major component of body mass and move with most activities [7].
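The preprocessing described above, a three-sample median filter on each axis followed by the length of the acceleration vector, can be sketched as below. The function names are hypothetical, and leaving the first and last samples unfiltered is an assumption, since the text does not say how the signal boundaries were handled.

```python
import math
from statistics import median


def median_filter3(signal):
    """Median filter with a window of three samples.

    Assumption: the first and last samples are copied unchanged,
    because they have no complete three-sample neighbourhood.
    """
    if len(signal) < 3:
        return list(signal)
    out = [signal[0]]
    for i in range(1, len(signal) - 1):
        out.append(median(signal[i - 1:i + 2]))
    out.append(signal[-1])
    return out


def acceleration_magnitude(ax, ay, az):
    """Filter each axis, then return sqrt(x^2 + y^2 + z^2) per sample (in g)."""
    fx, fy, fz = median_filter3(ax), median_filter3(ay), median_filter3(az)
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in zip(fx, fy, fz)]
```

For a device at rest the magnitude stays close to 1 g regardless of orientation, which is why the vector length rather than a single axis is a convenient input for detecting the rapid changes that accompany a fall.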


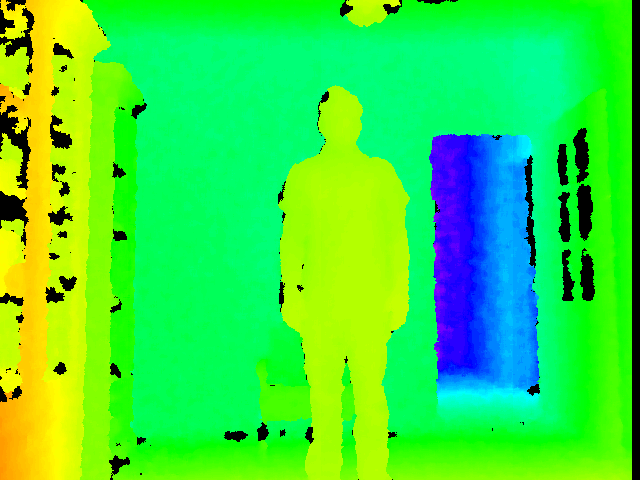

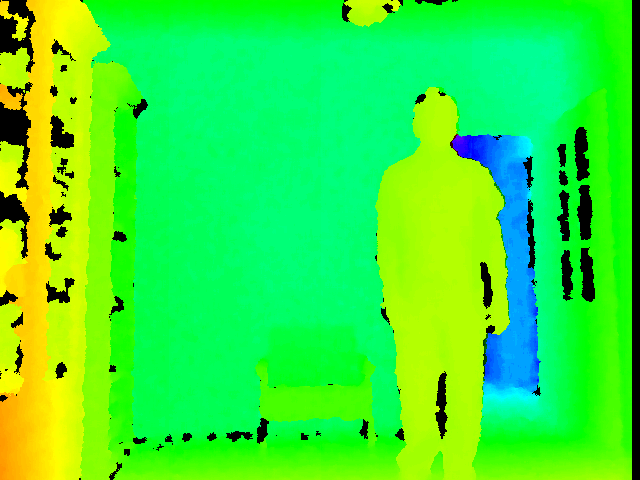

3.3 Depth Images

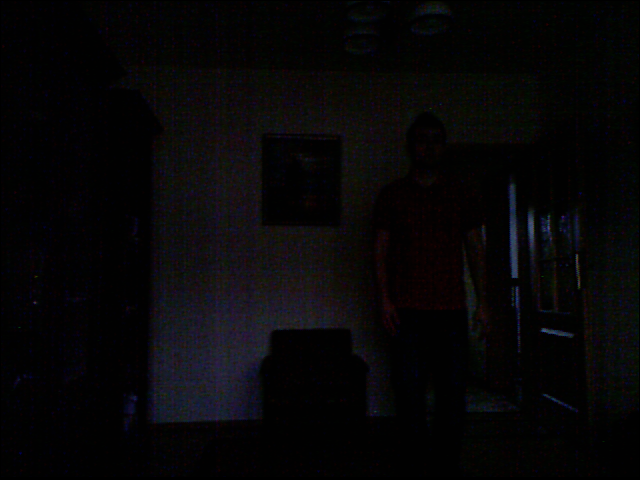

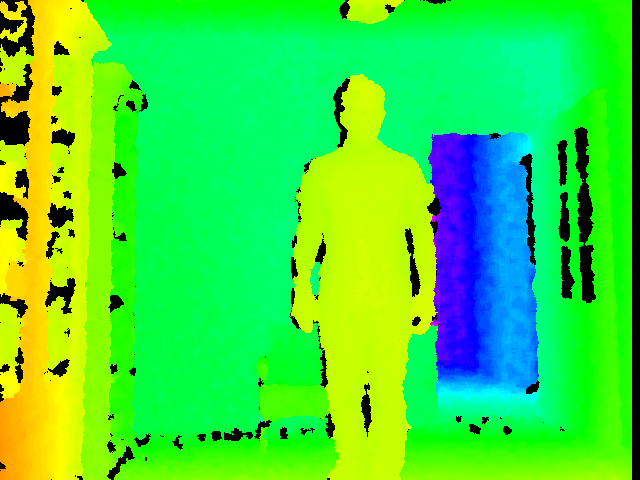

The Kinect sensor simultaneously captures depth and color images at a frame rate of about 30 fps. It consists of a laser-based infrared (IR) emitter, an infrared camera and an RGB camera. The IR camera and the IR projector form a stereo pair with a baseline of approximately 75 mm. Kinect’s field of view is 57 degrees horizontally and 43 degrees vertically. The minimum range for the Kinect is about 0.6 m and the maximum range is between 4 and 5 m. The device projects a speckle pattern onto the scene and infers depth from the deformation of that pattern. In order to determine the depth it combines this structured light technique with two classic computer vision techniques, namely depth from focus and depth from stereo. Pixels in the provided depth images indicate calibrated depth in the scene. The depth resolution is about 1 cm at 2 m distance. The depth map is supplied in VGA resolution (640 × 480 pixels) on 11 bits (2048 levels of sensitivity). Figure 3 depicts sample color images and the corresponding depth images, which were shot by Kinect in various lighting conditions, ranging from daylight to late evening. As we can observe, owing to the ability of Kinect to extract depth images in unlit or dark rooms, fall detection can be done in the late evening or even at night.

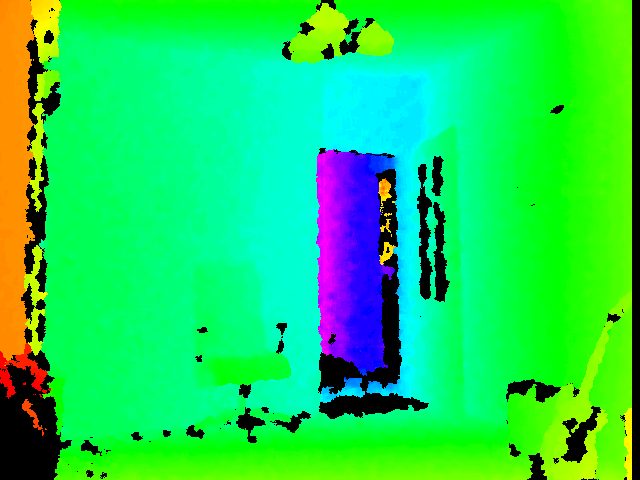

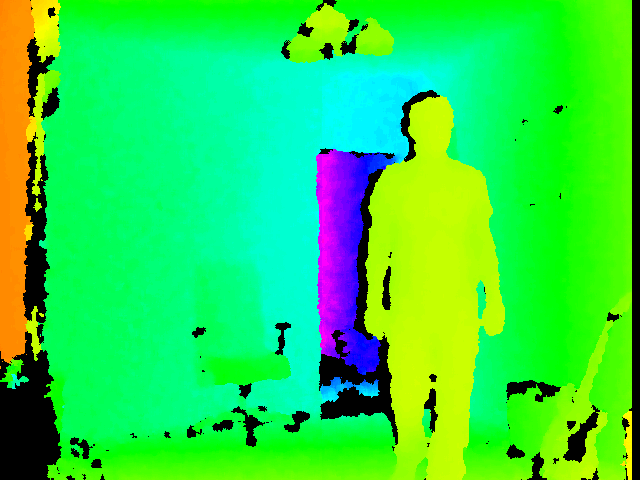

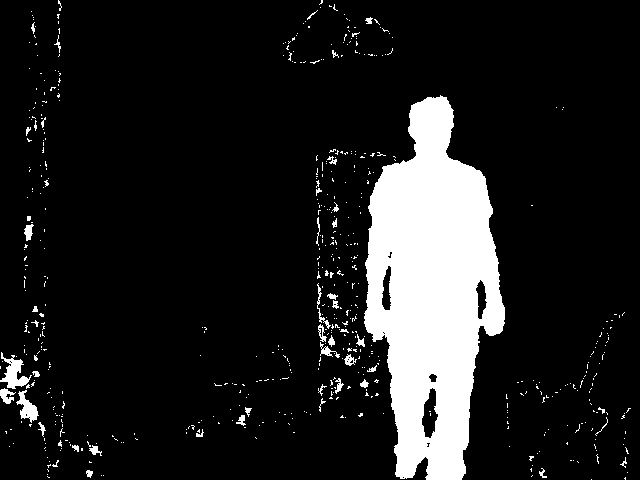

3.4 Extraction of the Object of Interest

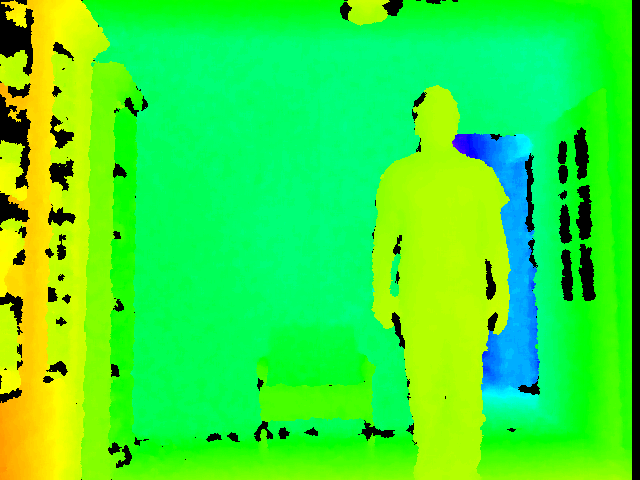

The depth images were acquired using the OpenNI (Open Natural Interaction) library (available at: http://www.openni.org/). The OpenNI framework supplies an application programming interface (API) and also provides the interface for physical devices and for middleware components. In order to extract the foreground object, a mean depth map was computed in advance. It was computed on the basis of several consecutive depth images acquired without the subject to be monitored and then stored for later use in the detection mode. In the detection mode the foreground objects were extracted by differencing the current image from this reference depth map. Afterwards, the foreground object was determined by extracting the largest connected component in the thresholded difference map. Finally, the center of gravity of the object of interest was calculated. The reference map-based extraction of the foreground object was selected because of the limited computational resources of the PandaBoard. The code profiler reported about 50% CPU usage by the module responsible for detection of the foreground object. Figure 4 illustrates the extraction of the object of interest in the depth image.
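The extraction steps above (mean reference map, thresholded difference, largest connected component, center of gravity) can be illustrated with the following pure-Python sketch. It is not the code that runs on the PandaBoard: depth images are assumed to be plain lists of rows, 4-connectivity is assumed, the threshold is a free parameter, and all function names are hypothetical.

```python
from collections import deque


def mean_depth_map(frames):
    """Average several depth images taken without the monitored person."""
    rows, cols = len(frames[0]), len(frames[0][0])
    n = len(frames)
    return [[sum(f[r][c] for f in frames) / n for c in range(cols)]
            for r in range(rows)]


def largest_component(mask):
    """Return the pixels of the largest 4-connected True region of mask."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            component, todo = [], deque([(r, c)])
            seen[r][c] = True
            while todo:  # breadth-first flood fill
                y, x = todo.popleft()
                component.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        todo.append((ny, nx))
            if len(component) > len(best):
                best = component
    return best


def extract_object(depth, reference, threshold):
    """Return (row, column, mean depth) of the foreground object, or None."""
    mask = [[abs(d - ref) > threshold for d, ref in zip(d_row, r_row)]
            for d_row, r_row in zip(depth, reference)]
    pixels = largest_component(mask)
    if not pixels:
        return None
    n = len(pixels)
    cy = sum(y for y, _ in pixels) / n
    cx = sum(x for _, x in pixels) / n
    cz = sum(depth[y][x] for y, x in pixels) / n
    return cy, cx, cz
```

Each frame needs only one subtraction and comparison per pixel plus a single flood-fill pass, which is in line with the stated motivation of keeping the computational load low.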


3.5 Fuzzy Inference Engine

The fall alarm is triggered by a fuzzy inference engine based on expert knowledge, which is declared explicitly by fuzzy rules and sets. As inputs the engine takes the acceleration, the angular velocity and the distance between the person’s center of gravity and the altitude at which the Kinect is placed. The length of the acceleration vector is calculated using data provided by the tri-axial accelerometer, whereas the angular velocity is provided by the gyroscope. A fuzzy inference system proposed by Takagi and Sugeno (TS) [12] is utilized to generate the fall alarm. It expresses human expert knowledge and experience by means of fuzzy inference rules represented as statements. In such an inference system the linear submodels associated with the TS rules are combined to describe the global behavior of the nonlinear system. The inference is done by a TS fuzzy system consisting of 27 rules [8]. The filtered data from the accelerometer and the gyroscope were interpolated and decimated, and synchronized with the data from Kinect, i.e. the center of gravity of the moving person.
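To make the structure of such an engine concrete, the sketch below implements a generic Takagi-Sugeno system with three inputs and three fuzzy sets per input, which yields 3 × 3 × 3 = 27 rules. Everything specific in it is a placeholder rather than the rule base of [8]: the inputs are assumed to be rescaled to [0, 1] so that larger values are more fall-like, the triangular sets are arbitrary, and constant (zero-order) consequents are used instead of linear submodels to keep the example short.

```python
from itertools import product


def tri(x, a, b, c):
    """Triangular membership function with feet at a and c and peak at b."""
    if x <= a or x >= c:
        return 0.0
    return (x - a) / (b - a) if x <= b else (c - x) / (c - b)


# Placeholder fuzzy sets on an input normalised to [0, 1].
SETS = {
    "low": (-0.5, 0.0, 0.5),
    "medium": (0.0, 0.5, 1.0),
    "high": (0.5, 1.0, 1.5),
}

# Placeholder constant consequents: the mean "level" of the three labels.
LEVEL = {"low": 0.0, "medium": 0.5, "high": 1.0}
RULES = [(labels, sum(LEVEL[label] for label in labels) / 3.0)
         for labels in product(SETS, repeat=3)]


def ts_infer(inputs):
    """Weighted average of rule consequents (zero-order TS inference).

    inputs: three values in [0, 1], one per input variable.
    The firing strength of a rule is the product of its memberships.
    """
    num = den = 0.0
    for labels, consequent in RULES:
        w = 1.0
        for x, label in zip(inputs, labels):
            w *= tri(x, *SETS[label])
        num += w * consequent
        den += w
    return num / den if den > 0.0 else 0.0
```

An alarm would then be raised when the output exceeds some threshold, which is likewise a free parameter in this sketch. Replacing each constant consequent with a linear function of the inputs gives the first-order TS form described in the text.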

4 Experimental Results

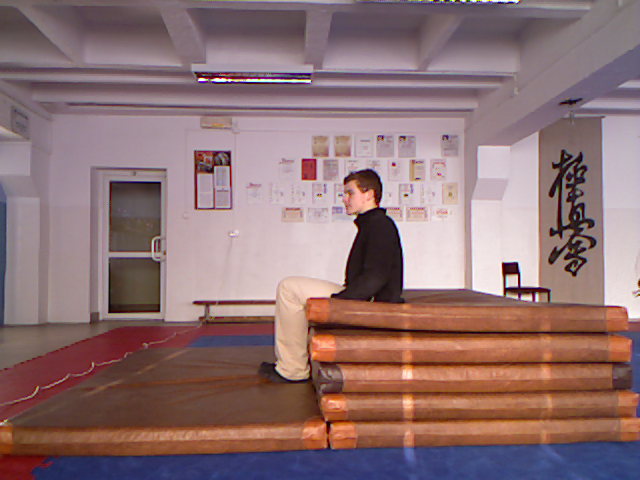

Five volunteers aged over 26 took part in the evaluation of the developed algorithm and system. Intentional falls were performed at home by four persons onto a carpet 2 cm thick and in a gym, see Fig. 5, onto a mattress 10 cm thick. The accelerometer was worn near the pelvis. Each individual performed three types of falls, namely forward, backward and lateral, at least three times. Each individual also performed ADLs such as walking, sitting, crouching down, leaning down to pick up objects from the floor, and lying on a bed. All intentional falls performed at home onto the carpet were detected correctly. In particular, sitting down fast, which is not easily distinguishable from a typical fall when only an accelerometer or even an accelerometer and a gyroscope are used, was recognized quite reliably by our system, see the results in Tab. 1. The system correctly detected seventeen of the eighteen falls performed in the gym onto the mattress. The slightly lower detection rate is due to the greater thickness of the mattress compared with the carpet.

| fall | sitting down | crouching down | walking | lying in a bed | picking up objects |
| --- | --- | --- | --- | --- | --- |
| 12/12 | 23/25 | 23/25 | 25/25 | 12/12 | 25/25 |

5 Conclusions

In this paper we demonstrated how to achieve reliable fall detection on an embedded platform. The detection was done by a fuzzy inference system using Kinect, an accelerometer and a gyroscope. The system runs on the low-cost PandaBoard ES. It permits unobtrusive fall detection and preserves the privacy of the user. The results show that a single accelerometer with a gyroscope, together with Kinect, is sufficient to implement a low-cost system for reliable fall detection.

Acknowledgments.

This work has been supported by the National Science Centre (NCN) within the project N N516 483240.

References

- [1] (2006) Recognizing falls from silhouettes. pp. 6388–6391.

- [2] (2007) Evaluation of a threshold-based tri-axial accelerometer fall detection algorithm. Gait & Posture 26 (2), pp. 194–199.

- [3] (2007) A multi-camera vision system for fall detection and alarm generation. Expert Systems 24 (5), pp. 334–345.

- [4] (2003) SPEEDY: a fall detector in a wrist watch. ISWC ’03, Washington, DC, USA, pp. 184–187.

- [5] (2010) Cost of falls in old age: a systematic review. Osteoporosis Int. 21, pp. 891–902.

- [6] (2002) Assistive technologies: principles and practice. Mosby, 2nd edn.

- [7] (2008) Comparison of low-complexity fall detection algorithms for body attached accelerometers. Gait & Posture 28 (2), pp. 285–291.

- [8] (2012-04) Fuzzy inference-based reliable fall detection using Kinect and accelerometer. The 11th Int. Conf. on Artificial Intell. and Soft Computing, LNCS, vol. 7267, Springer, pp. 266–273.

- [9] (2006) A customized human fall detection system using omni-camera images and personal information. Distributed Diagnosis and Home Healthcare, pp. 39–42.

- [10] (2007-08) Fall detection - principles and methods. pp. 1663–1666.

- [11] (2006-08) Monocular 3D head tracking to detect falls of elderly people. pp. 6384–6387.

- [12] (1985) Fuzzy identification of systems and its applications to modeling and control. IEEE Trans. on SMC 15 (1), pp. 116–132.

- [13] (2008) Approaches and principles of fall detection for elderly and patient. pp. 42–47.