ACADEMIC WEBSITE - ADRIAN HORZYK, PhD DSc


AGH University of Science and Technology in Cracow, Poland


KNOWLEDGE AND ASSOCIATIONS

Knowledge

Knowledge forms automatically in the brains of living creatures. It can develop, expand, become more specific, change, and be verified during one’s lifetime. Individual knowledge is a limited reflection of reality in the associative neural system. Knowledge is a simplified projection and aggregation of objects, facts, rules, algorithms, and their various associations. Brain structures represent classes of objects rather than individual objects: the frequently repeated features of similar objects are remembered and used as the definition of such classes. Knowledge is indispensable for intelligent behavior and for intelligence in general. It allows us to perform reasoning and thinking processes. Knowledge is limited by the perception systems of individuals because it is formed from acquired signals and their combinations. Knowledge acquisition is natural for humans but not for machines... Knowledge is usually defined as awareness of or familiarity with someone or something, which can include facts, information, descriptions, and skills acquired through experience or education. Computer science defines knowledge as a set of facts and rules stored and exploited using databases and expert systems, or as the inner configuration of a neural network created in a training process for a given set of training samples. Human knowledge does not work like a relational database: it relies on fast and direct communication between neurons to consolidate facts and rules and to quickly recall associations, whereas relational databases have to loop over and search for specific data while evaluating various formulas or equations. Moreover, the brains of living creatures have no suitable structures for storing the data tables that are commonly used in today’s computer systems. People use their knowledge and intelligence to perform various actions and reactions in pursuit of a variety of purposes and goals that usually reflect their needs.
Knowledge together with intelligence allows people to behave intentionally and act efficiently. Knowledge is quickly available thanks to associations of learned facts, rules, their combinations, and sequences that are consolidated and can be recalled in the future. A sufficiently rich context is necessary to recall consolidated associations and remembered facts or rules. It has already been shown that learning foreign languages is much faster if students learn whole phrases instead of single words. Sequences of words or actions provide a richer context for association and later recall, and are thus learned more efficiently. If you have to loop through data, search them, or evaluate formulas or equations, you have only raw data, not knowledge about something. Knowledge enables instantaneous reasoning based on facts and rules consolidated in the past. Human knowledge is always subjective and limited by one’s sensations, experiences, collected information, and intelligence. Each human perceives situations and surroundings in view of his or her knowledge. One’s knowledge is very personal and cannot easily be transmitted or made available to other people. One’s knowledge is closely connected to the neural parameters and structure of his or her brain. The only known way of sharing one’s knowledge is to ask him or her about interesting facts and receive answers produced by the associations formed in his or her brain. This kind of sharing makes available some pieces of information that come from one’s knowledge, but not the knowledge itself!

Knowledge Engineering

Knowledge engineering (KE) is an engineering discipline that involves integrating knowledge into computer systems in order to solve complex problems normally requiring a high level of human expertise. Knowledge engineering was defined in 1983 by Edward Feigenbaum and Pamela McCorduck. Knowledge engineering is key to solving some of the fundamental challenges of today’s computer science.
It facilitates more efficient and intelligent computer systems and the further development of artificial intelligence. Classical knowledge representation techniques try to represent data hierarchically using:

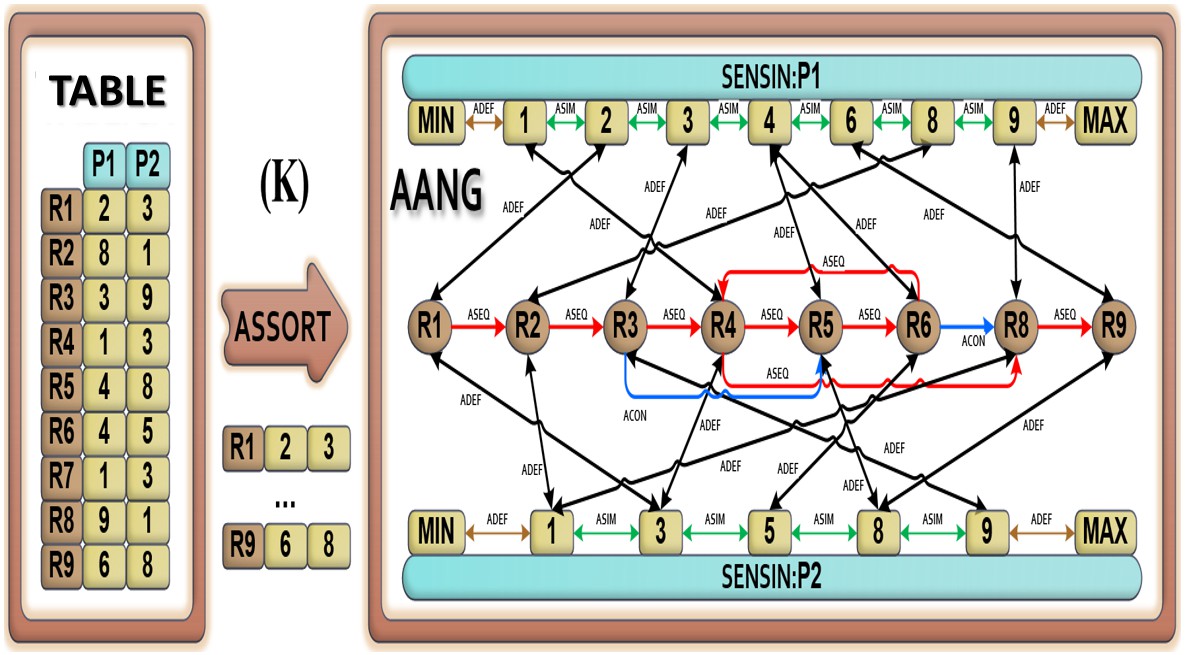

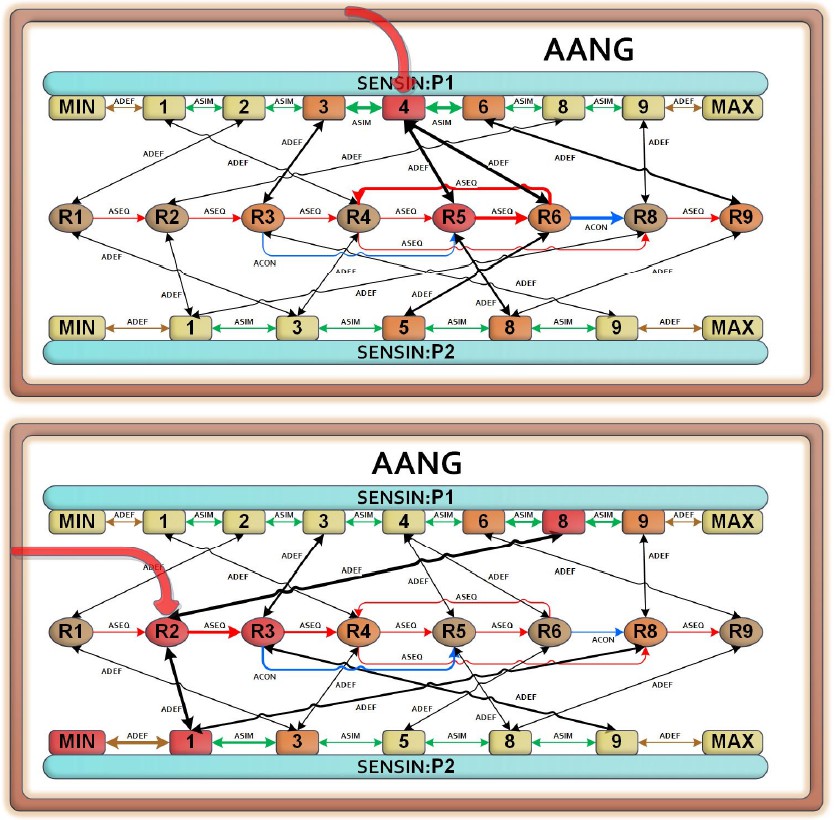

All these solutions, rules, and data structures require searching through them and using some kind of logic, equations, and conditions to extract useful information from the collected data.

Associations

The question is how to enable a computer system to actively consolidate facts and rules so that it can quickly recall or generalize them. Can you explain your thinking or reasoning processes? Do you know why you can recall even intricate things, procedures, or mechanisms so quickly? Can you explain why you sometimes cannot recall something? The human brain is an intricate associative machine that can learn various kinds of associations and instantaneously recall them if an appropriate context is given. It can easily learn something new only if it can be defined by known objects and differs from the other known objects. Moreover, it can remember many sequences of objects or actions, which enables us to construct rules, sequences, sentences, and algorithms. Each rule can also be easily recalled, but only if a suitable context occurs and is recognized. The recognition of the context is enough to start the recall sequence of associations. Many associated and consolidated rules, sequences, sentences, and algorithms allow us to make small changes in the recalled sequences. This depends on the variety of consolidated rules, sequences, sentences, and algorithms, their similarities, and the context of their recall. If the context is new, the recalled sequences can change accordingly, producing new creative behavior or a new statement. The associative processes in the brains of living creatures incorporate new facts and rules into those already associated. We will try to model these processes in artificial associative systems.

Artificial Associative Systems

Artificial Associative Systems (AAS) enable us to construct computational systems that are based on objects, facts, and rules associated in a specific graph structure (AGDS).
They are a special kind of neural network constructed from associative neurons (as-neurons), receptors, effectors, sensorial input fields, effectorial actuators, and interneuronal space. First of all, data from classic tables have to be transformed into the associative graph data structure (AGDS), which consolidates all duplicates for each attribute (parameter) separately. The example below demonstrates the final effect of such a transformation made by the ASSORT (associative sort, or as-sort) algorithm, which can be easily implemented. This kind of sort allows us to sort data tables by all attributes simultaneously (no indexing is necessary). The resulting graph can easily be transformed into an active associative neural graph (AANG), a kind of neural network that allows us to trigger and recall simple associations on the transformed data. The final graph structure consists of:

Neither the receptorial representation of attribute values nor the neural representation of objects (records/tuples) is duplicated. All duplicates are consolidated and represented only once! This is one of the main strengths of this associative structure. It allows similar objects to be appropriately associated because they are connected to each other through the same nodes (receptors or neurons) that represent shared values of the individual attributes. Even if objects do not share the same values but their values of the same attributes are similar, such objects are closely connected, because their similar attribute values are also connected and the path between them is very short. Moreover, when using neurons we need neither to evaluate equations nor to check conditions, because neurons propagate signals through connections, taking their weights into account. The weights reflect similarities between values or the strength with which values define objects. This structure can be used to quickly find similarities or differences between represented objects, groups of similar objects, discriminating attributes, etc. More details can be found in the monograph describing this algorithm.
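To make the consolidation idea more concrete, here is a minimal Python sketch of an AGDS-like structure, assuming the table is given as a list of dicts with an `id` key. Each distinct value of an attribute is stored once and inserted in sorted position, so every attribute ends up sorted while the table is loaded, and objects are linked to the value nodes they share. The class name `AGDS`, its methods, and the linear similarity weight 1 - |a - b| / (max - min) are assumptions made for illustration; this is not the published ASSORT implementation, and it loops over value nodes in Python instead of propagating signals through an AANG.

```python
from bisect import insort
from collections import defaultdict


class AGDS:
    """Minimal AGDS-like sketch (hypothetical; not the published ASSORT code)."""

    def __init__(self, records):
        self.values = defaultdict(dict)         # attribute -> value -> set of object ids
        self.sorted_values = defaultdict(list)  # attribute -> distinct values, ascending
        self.objects = {}                       # object id -> {attribute: value}
        for rec in records:
            oid = rec["id"]
            attrs = {k: v for k, v in rec.items() if k != "id"}
            self.objects[oid] = attrs
            for attr, val in attrs.items():
                nodes = self.values[attr]
                if val not in nodes:
                    # A value node is created only once per attribute, in sorted position.
                    nodes[val] = set()
                    insort(self.sorted_values[attr], val)
                nodes[val].add(oid)

    def objects_sorted_by(self, attr):
        """Read objects in order of attr by walking the already sorted value nodes."""
        return [oid for v in self.sorted_values[attr] for oid in sorted(self.values[attr][v])]

    def weight(self, attr, a, b):
        """Assumed similarity: 1 for equal values, linear in distance for numbers, else 0."""
        if a == b:
            return 1.0
        if isinstance(a, (int, float)) and isinstance(b, (int, float)):
            vals = self.sorted_values[attr]
            span = vals[-1] - vals[0]
            return 1.0 - abs(a - b) / span if span else 0.0
        return 0.0

    def similar_to(self, oid):
        """Rank other objects by the summed similarity of their attribute values to oid's."""
        scores = defaultdict(float)
        for attr, val in self.objects[oid].items():
            for other_val, owners in self.values[attr].items():
                w = self.weight(attr, val, other_val)
                for other in owners:
                    if other != oid:
                        scores[other] += w
        return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical sample table; attribute names and values are illustrative only.
records = [
    {"id": "O1", "length": 5.1, "color": "red"},
    {"id": "O2", "length": 4.9, "color": "red"},
    {"id": "O3", "length": 6.3, "color": "blue"},
    {"id": "O4", "length": 5.1, "color": "blue"},
]
graph = AGDS(records)
print(graph.sorted_values["length"])               # [4.9, 5.1, 6.3]
print(graph.objects_sorted_by("length"))           # ['O2', 'O1', 'O4', 'O3']
print([oid for oid, _ in graph.similar_to("O1")])  # ['O2', 'O4', 'O3']
```

Because duplicate values share one node, O1 and O4 are linked through the single `5.1` node of `length`, and O2 ranks as most similar to O1 because it shares the `red` node and has a close `length` value.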

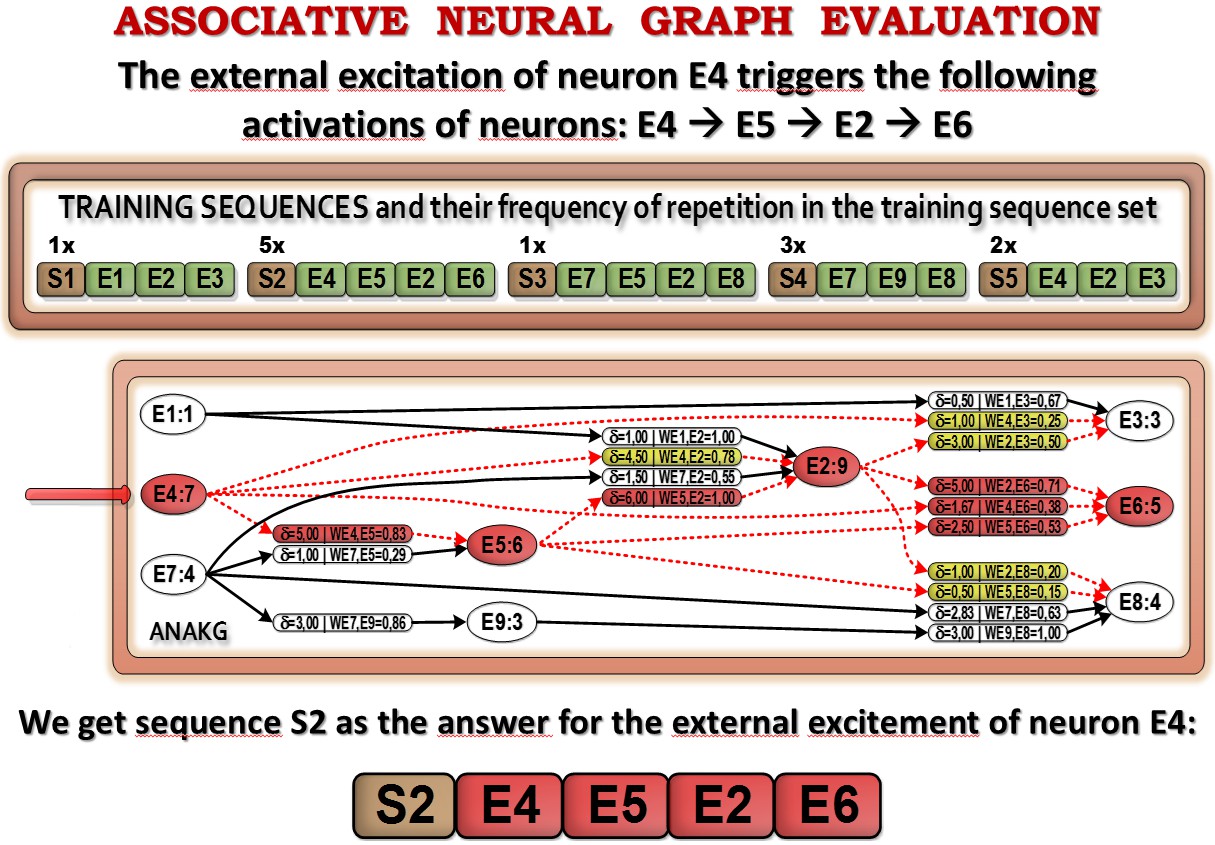

Artificial Associative Systems (AAS) can also be used to consolidate and associate training sequences. In this case, duplicates of both the represented objects and the sequences are consolidated. This enables us to associate many sequences in a single graph and to take into account the contexts of the successive objects in these sequences.
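The consolidation of sequences can be illustrated with a small Python sketch, assuming sentences are split into lowercase words on whitespace. Each distinct word becomes one node, however many sentences it appears in, and each pair of consecutive words becomes one directed edge whose counter grows when the same transition reappears. The function name and the sentences are hypothetical, and the transition counter is only a simplified stand-in for the connection weights of an as-system.

```python
from collections import defaultdict


def build_sequence_graph(sentences):
    """Consolidate repeated words into single nodes and count word-to-word transitions."""
    nodes = set()
    edges = defaultdict(int)  # (word, next_word) -> number of times this transition occurs
    for sentence in sentences:
        words = sentence.lower().split()
        nodes.update(words)
        for current, following in zip(words, words[1:]):
            edges[(current, following)] += 1
    return nodes, dict(edges)


# Hypothetical training sentences (not the seven sentences from the original example).
sentences = ["the cat is small", "the small cat sleeps", "a dog is big", "the cat is big"]
nodes, edges = build_sequence_graph(sentences)
print(len(nodes))                                  # 8 distinct words out of 16 in total
print(edges[("the", "cat")])                       # 2: one shared edge with a count
print(sorted(w for (v, w) in edges if v == "is"))  # ['big', 'small']
```

The word `is` is stored once but now leads to two different continuations, so the following word depends on the context in which `is` is reached.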

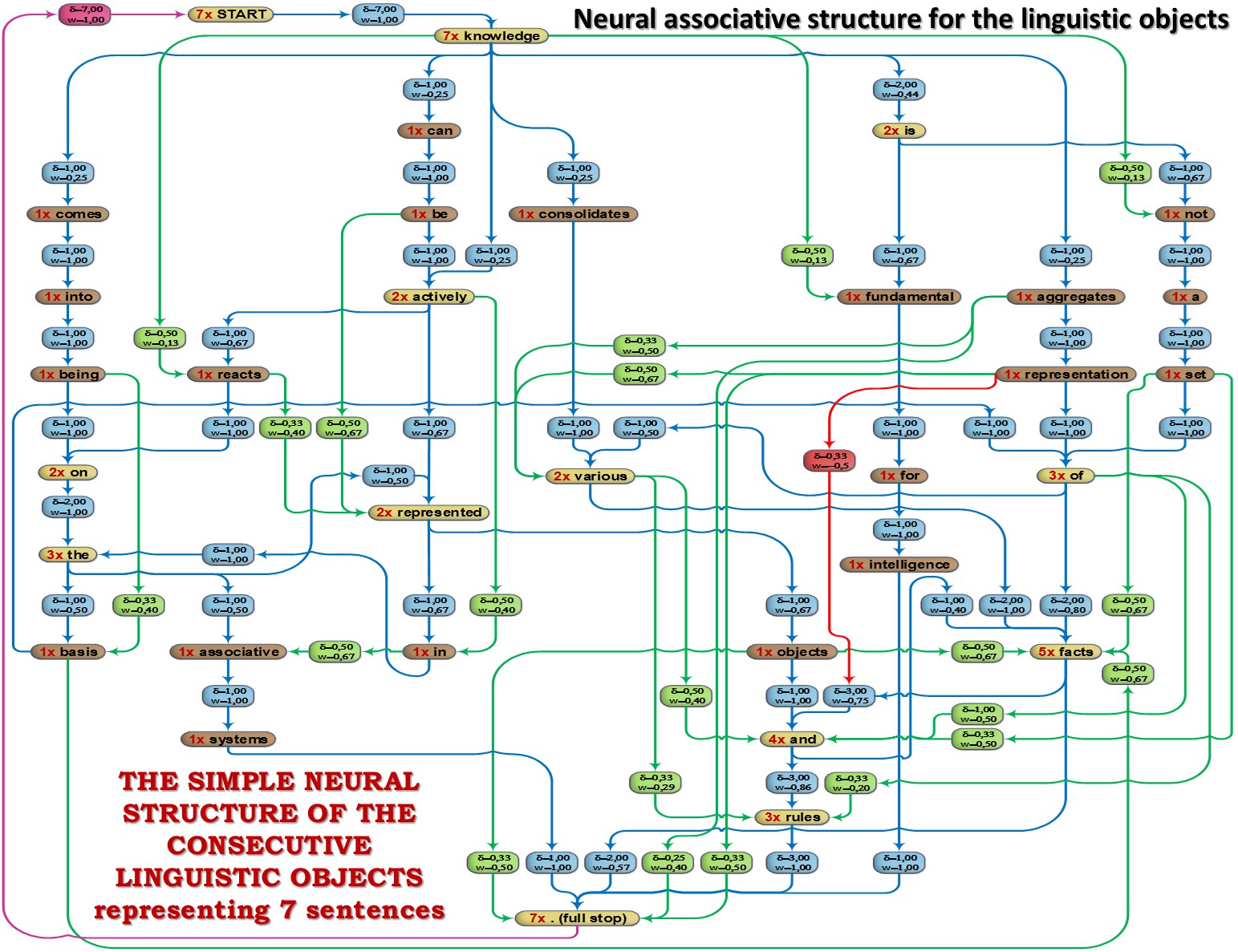

Thanks to this kind of associative consolidation of sentences, we can get very interesting behaviors from as-systems. For example, for the following seven sentences:

we can get the following graph (some simplifications have been made):

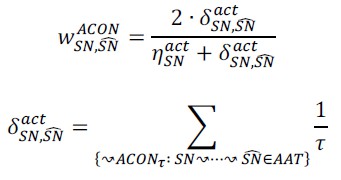

In order to compute appropriate weights for connected neurons, it is necessary to determine the distance

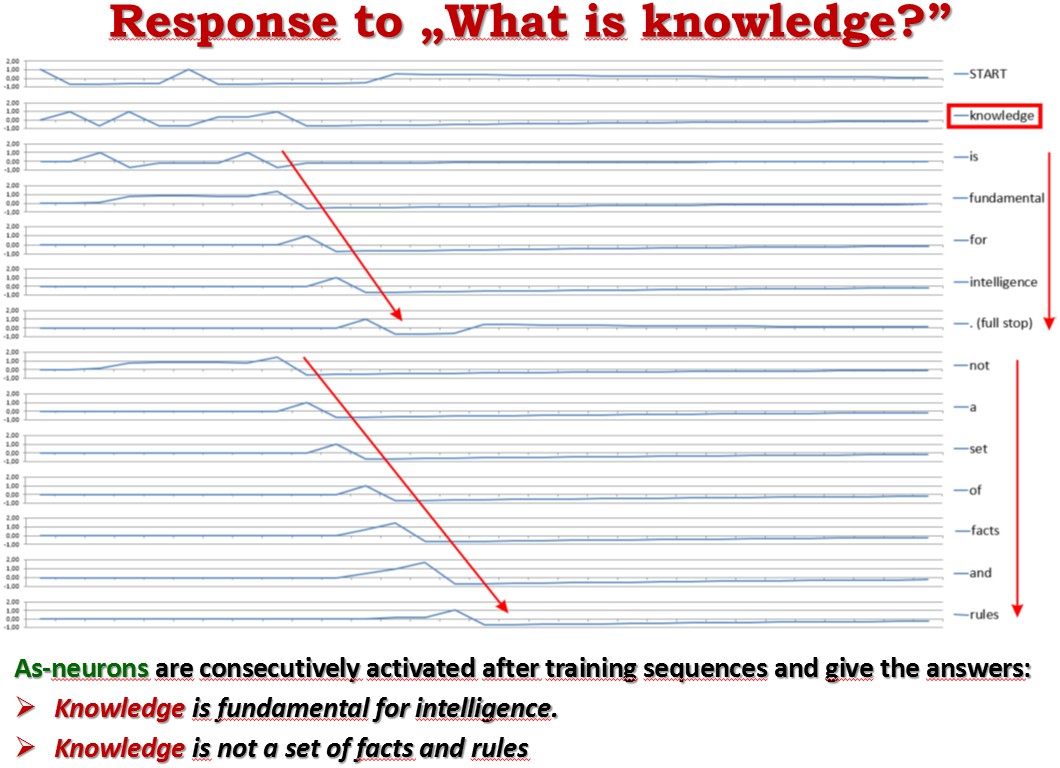

This neural graph enables us to externally trigger a selected group of neurons, at once or in sequence, and recall stored associations. The sequence of activated neurons corresponds to the answer given by the artificial as-system, e.g.:
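Triggering and recall can be sketched roughly in Python, assuming each word is connected to every later word of its sentence with weight 1/distance. That weighting is a placeholder assumption and not necessarily the distance-based formula used in the original as-systems. The triggered words are activated in order; then, at each step, the word with the largest summed connection weight from all active words is activated, and recall stops when the most recently activated word has no unused successors. The function names and sentences are hypothetical.

```python
from collections import defaultdict


def build_graph(sentences):
    """Connect each word to every later word in its sentence with weight 1/distance (assumed)."""
    graph = defaultdict(lambda: defaultdict(float))
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            for j in range(i + 1, len(words)):
                graph[word][words[j]] += 1.0 / (j - i)
    return {word: dict(targets) for word, targets in graph.items()}


def recall(graph, triggered, max_len=10):
    """Trigger words in order, then repeatedly activate the best-supported next word."""
    active = [word.lower() for word in triggered]
    answer = []
    while len(answer) < max_len:
        scores = {}
        # Only words connected from the most recently active word are candidates.
        for cand in graph.get(active[-1], {}):
            if cand in active:
                continue
            # Every active word adds its connection weight, so the whole context matters.
            scores[cand] = sum(graph.get(word, {}).get(cand, 0.0) for word in active)
        if not scores:
            break  # the last word has no unused successors: the recalled sequence ends
        best = max(scores, key=scores.get)
        answer.append(best)
        active.append(best)
    return answer


sentences = ["the cat is small", "the small cat sleeps", "a dog is big", "the cat is big"]
graph = build_graph(sentences)
print(recall(graph, ["a"]))             # ['dog', 'is', 'big']
print(recall(graph, ["the", "small"]))  # ['cat', 'is', 'big']
```

Triggering only `a` recalls the rest of a trained sentence, while triggering `the small` yields "the small cat is big", a sequence that none of the training sentences contains as a whole. This loosely mirrors how consolidated sequences can combine into new statements.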

For more details, you can look through the monograph describing artificial associative systems.

Creativity of Associative Systems

Associative systems (as-systems) are naturally creative because they are able to generalize facts and rules thanks to the consolidated representation of objects and their sequences. Interesting links and extra materials to study:
